Why I Needed A Docker Container That Does Nothing.

If you haven't been following gaming news recently, you may have missed that the coop-base-builder-exploration-game du jour is currently Palworld, which has been aptly described as "Pokémon with guns."

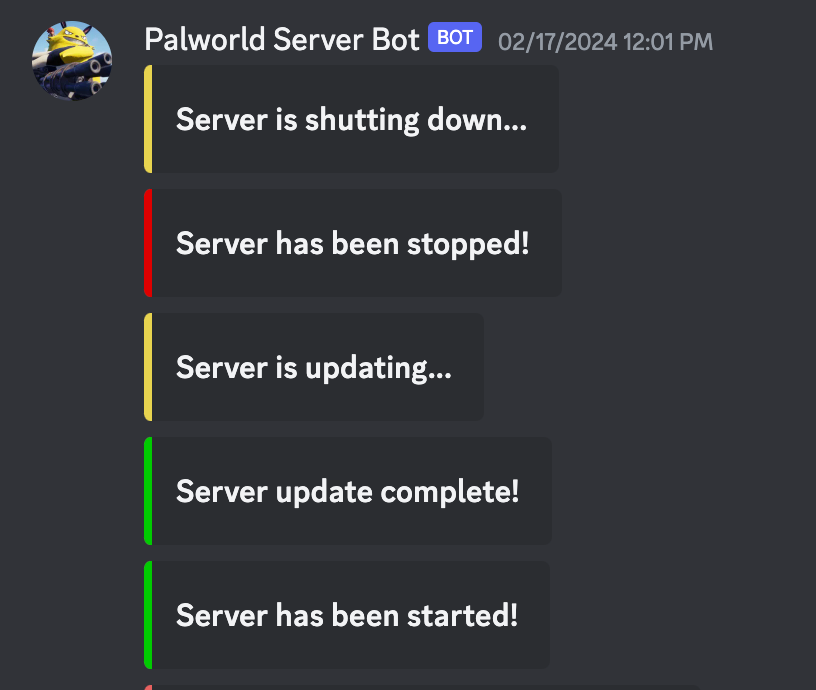

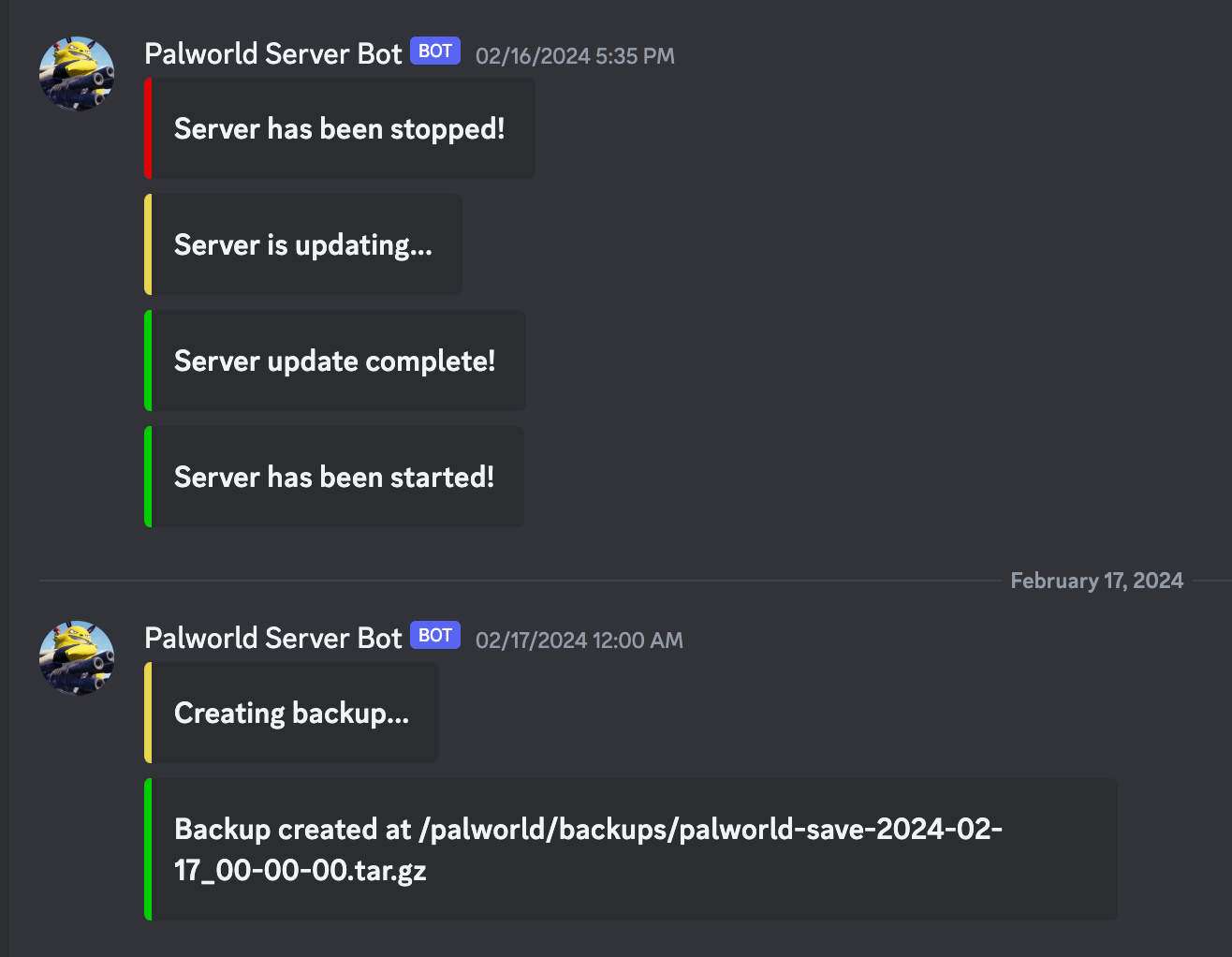

My friends and I have been playing too, and I've been self-hosting a server for us using Docker and Docker Compose. The Docker container image I was using is excellent (link below) and supports sending Discord webhooks whenever the server starts up or shuts down, which is surprisingly critical because the game server gets sluggish if you don't reboot it once a day or so.

This is what they look like:

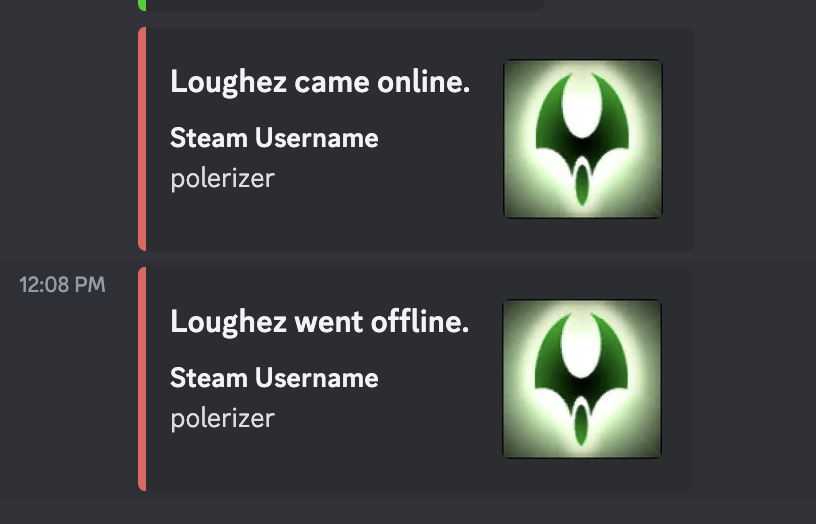

I wanted to expand on this to message the Discord whenever players joined or left the server, so folks could see who's online and feel tempted to hop in. I threw something together in Go that uses the Linux conntrack module and Palworld's rcon server to generate join/leave events to send to Discord, and this is what they ended up looking like:

What is Conntrack?

The conntrack module tracks network connections to the system and is usually used to specify firewall rules that allow packets to reach the system if they're related to a different connection that is already allowed. For example, your firewall may be configured to not allow any incoming traffic if your computer isn't hosting anything. However, when you browse the web and connect to an HTTP server, you've gotta somehow get packets back from the server. That's where conntrack comes in: the outbound flow that your HTTP request creates is allowed by the firewall, then conntrack makes a note of that connection and records an entry saying "allow incoming packets that correspond to this connection", and finally, when the HTTP server sends a response, the inbound flow will match the expected flow that conntrack recorded and the firewall lets it through.

That's what conntrack is mostly used for, but it does have one other cool trick: it can dispatch an event every time a connection changes (e.g. a new connection is created, or an existing one closes). The events look something like this:

    server-manager-1 | Event[NEW](UDP) 1.2.3.4:[55533] - localhost(192.168.240.2):[8211] ([CONFIRMED, UN-REPLIED])
    server-manager-1 | Event[UPDATE](UDP) 1.2.3.4:[55533] - localhost(192.168.240.2):[8211] ([REPLIED])
    server-manager-1 | Event[UPDATE](UDP) 1.2.3.4:[55533] - localhost(192.168.240.2):[8211] ([ASSURED])
    server-manager-1 | Event[DESTROY](UDP) 1.2.3.4:[55533] - localhost(192.168.240.2):[8211] ([ASSURED][DYING])

In the example above, we can see a connection from a client (1.2.3.4:55533) to the server (192.168.240.2:8211):

- First, we get a NEW event showing that we got a UDP packet in this flow which made it past the firewall (CONFIRMED) but the server hasn't responded yet (UNREPLIED).
- Next, we get an update event showing that the server replied (REPLIED).
- Then, we get another update event showing that the client sent a response in reply to the server's message (ASSURED). This is analogous to TCP's handshake where the initiator sends a SYN packet, the receiver responds with a SYN-ACK, and then the initiator responds with an ACK and can start sending data. UDP doesn't have a handshake because it's not a stateful protocol, but conntrack provides these events anyway since they're broadly useful in determining when to count a connection as "active". Since Palworld presumably has a connection handshake between the client and the server, the ASSURED state is good for us.
- Finally, we get a destroy event when the client has disconnected (DYING). Since UDP doesn't have an explicit "hangup" message like TCP's FIN or RST packets, conntrack marks a UDP connection as dead if it hasn't received a packet for some amount of time (on my system, it's 180 seconds).
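The lifecycle above maps fairly naturally onto join/leave notifications. Here's a minimal sketch of that mapping, assuming a simplified, hypothetical event type; it is not the actual server-manager code, and it ignores the rcon side entirely.

```go
package main

import "fmt"

// event is a hypothetical, simplified conntrack event; real events carry more fields.
type event struct {
	kind    string // "NEW", "UPDATE", or "DESTROY"
	client  string // client ip:port
	assured bool   // whether the ASSURED status flag is set
}

// sessions tracks which client flows currently count as connected.
type sessions map[string]bool

// handle returns "join", "leave", or "" for events that change nothing.
func (s sessions) handle(e event) string {
	switch {
	case e.kind == "UPDATE" && e.assured && !s[e.client]:
		// The flow only counts as a player once it reaches ASSURED.
		s[e.client] = true
		return "join"
	case e.kind == "DESTROY" && s[e.client]:
		// Only announce a leave for flows that were announced as a join.
		delete(s, e.client)
		return "leave"
	}
	return ""
}

func main() {
	s := sessions{}
	events := []event{
		{"NEW", "1.2.3.4:55533", false},
		{"UPDATE", "1.2.3.4:55533", false}, // REPLIED
		{"UPDATE", "1.2.3.4:55533", true},  // ASSURED
		{"DESTROY", "1.2.3.4:55533", true},
	}
	for _, e := range events {
		if action := s.handle(e); action != "" {
			fmt.Println(action, e.client)
		}
	}
}
```

Waiting for ASSURED before announcing a join means a stray packet that never gets a two-way exchange going doesn't produce a notification, and a DESTROY for such a flow is silently ignored.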

Why does that make the networking hard?

conntrack only tracks connections to the local system, e.g. a VM or a container. It can't track connections going to a different container. This is because Linux network isolation is done with namespaces (net-ns), and each Docker container, by default, has its own separate network namespace. This means that if two containers both listen on the same port (say, port 80), they can both bind to that port because they're doing it in their own namespaces. To get outside traffic to reach a container's port, it needs to be forwarded, which is why Docker supports forwarding ports from the host to a container (e.g. docker run ... -p 8000:80).
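One way to see which network namespace a process lives in is to read the /proc/self/ns/net symlink, whose target looks like net:[4026531992] on Linux. Two processes that print the same number share a net-ns. The sketch below does just that; parseNetNS is a helper name invented for this example.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// parseNetNS extracts the inode number from a link target such as "net:[4026531992]".
func parseNetNS(link string) (string, error) {
	if !strings.HasPrefix(link, "net:[") || !strings.HasSuffix(link, "]") {
		return "", fmt.Errorf("unexpected namespace link %q", link)
	}
	return strings.TrimSuffix(strings.TrimPrefix(link, "net:["), "]"), nil
}

func main() {
	// This symlink only exists on Linux.
	link, err := os.Readlink("/proc/self/ns/net")
	if err != nil {
		fmt.Println("could not read the namespace link:", err)
		return
	}
	id, err := parseNetNS(link)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("network namespace inode:", id)
}
```

Running this in two containers from a default Docker Compose stack should print two different inode numbers, which is exactly why conntrack in one container can't see the other's connections.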

My server manager program in Go needed to be running in the same net-ns as the Palworld server, but they were running in different containers within the Docker Compose stack. I could make a new container image that ran both my server manager and the default command for the Palworld server, but that would require a more complex integration and would couple the two components together. This is roughly what the docker-compose.yaml looked like at this point:

    services:
      palworld:
        image: thijsvanloef/palworld-server-docker:latest
        restart: unless-stopped
        ports:
          - 8211:8211/udp
          - 27015:27015/udp
        volumes:
          - ./data:/palworld/
      server-manager:
        image: golang:latest
        restart: unless-stopped
        depends_on:
          - palworld
        command: "/src/server-manager"
        cap_add:
          - NET_ADMIN
        volumes:
          - ./src:/src

docker-compose.yaml: I was too lazy to build the Go binary into its own Docker image.

Now, unlike in Docker Compose, when you deploy a group of containers together in Kubernetes, you can deploy them as a "pod", which puts them all in the same net-ns. Docker Compose creates a unique net-ns for each container you define and creates a new virtual private network for them, so they can communicate with each other, but they each have their own network stack. If this were a Kubernetes pod, then server-manager would be able to monitor connections to palworld:8211, but not so in Docker Compose.

Then I discovered this flag: --network container:<container-name>. It's a container networking option that's a bit buried in the docs. In a Docker Compose service definition, the equivalent is network_mode: service:<service-name> (or network_mode: container:<container-name> for a container outside the stack), and when you specify it, Docker will put the container you're creating into the net-ns of the container you specified.

This kinda emulates a Kubernetes pod. Let's see the new docker-compose.yaml after this update:

    services:
      palworld:
        image: thijsvanloef/palworld-server-docker:latest
        restart: unless-stopped
        ports:
          - 8211:8211/udp
          - 27015:27015/udp
        volumes:
          - ./data:/palworld/
      server-manager:
        image: golang:latest
        restart: unless-stopped
        depends_on:
          - palworld
        network_mode: service:palworld # <-- This line right here
        command: "/src/server-manager"
        cap_add:
          - NET_ADMIN
        volumes:
          - ./src:/src

docker-compose.yaml but with server-manager and palworld in the same net-ns.

And then there were more problems...

Putting server-manager in palworld's net-ns worked well for a while: server-manager was able to use conntrack to monitor UDP connections to the Palworld game server port. However, after about a day, I'd suddenly stop getting messages when people joined and left unless I restarted server-manager manually. Suspiciously, it would also fail to connect to the rcon server the game binary exposed on 127.0.0.1:25575, even though I could still connect to it from other containers in the same stack (which I didn't include in the docker-compose.yaml examples above).

Remember when I said that the server needed to be rebooted regularly? I noticed in the logs that this all started happening after it rebooted. The server status messages in the screenshot above come from the palworld container. When its scheduled restart time comes, it shuts down the server, which causes the Docker container to exit. Because the service has restart: unless-stopped in its definition, Docker will then automatically start the container again.

Turns out, when this happens, the restarted palworld container gets a new net-ns, but since the server-manager container hasn't restarted, it doesn't get connected to that new net-ns: it's orphaned on the net-ns palworld had before it shut down. Thus, conntrack doesn't get any new connection events, and rcon can't reach the server on 127.0.0.1 (localhost).

If I wanted to keep the palworld container separate from the server-manager container so that they could be restarted or updated independently, I'd need to find a way to keep both of them in the same net-ns even if one or the other restarted.

Much ado about doing nothing.

So my galaxy-brain plan was this:

- Add a third container to the docker-compose.yaml that would not restart.
- Connect both the palworld container and the server-manager container to that container's net-ns.
- Now, whenever palworld or server-manager restarts, they'd get connected back to the same net-ns.

I needed a container to do nothing, but in a reliable, easy-to-understand way. There are hacks for doing this using a shell, but that seemed like a pretty heavy way to do it. The best-named shell hack I found is cat-abuse: you run cat, which will listen for input from stdin until it reaches an EOF marker, which will never happen if you don't attach to the container or otherwise feed it input, so it'll wait forever. I've been learning Go recently since a friend was passionate about teaching me, so I figured I'd give that a try.

Enter, the world's most useless Go program:

    package main

    import (
    	"log"
    	"os"
    	"os/signal"
    )

    func main() {
    	log.Printf("Waiting forever. Ctrl + C to interrupt...\n")

    	sigChan := make(chan os.Signal, 1)
    	signal.Notify(sigChan, os.Interrupt)
    	<-sigChan

    	log.Printf("Got SIGINT, exiting.\n")
    }

This program registers a handler for SIGINT, which is the signal sent to the process when you type Ctrl + C in a shell. It creates a Go channel that will receive a message when the program receives a SIGINT, then it waits until it receives that message. It blocks until it does, so unless you explicitly send a SIGINT to the container, it'll wait "forever".

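At first glance, the signal handler doesn't look necessary: you could just block on a channel that no one will ever send to, without passing it to a handler that could conceivably send a message. In practice, though, the Go runtime notices when every goroutine is blocked with nothing left to wake it up and aborts with "fatal error: all goroutines are asleep - deadlock!", so the bare version exits right away instead of waiting. Hooking up SIGINT avoids that, and it also makes it more explicit that the program isn't supposed to stop waiting unless it's told to stop. For reference, this is the simplified version, which I think is less elegant anyway: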

    package main

    func main() {
    	sigChan := make(chan struct{})
    	<-sigChan
    }

In Go, the empty struct (struct{}) takes up no space and is used as a dummy type when you need a type that doesn't store any data.

Next, I added a Dockerfile to build the image, in as lean a way as possible:

    FROM golang:1.22.0
    COPY ./src /src
    WORKDIR /src
    RUN go build -o /bin/wait-forever ./main.go

    FROM scratch
    COPY --from=0 /bin/wait-forever /bin/wait-forever
    CMD ["/bin/wait-forever"]

Dockerfile: This is a two-stage build. First, a container with the Go toolchain compiles the source code, then we copy just the binary into a new, empty image (FROM scratch). This saves space since there are no other binaries in the final container.

With that in place, I could update the docker-compose.yaml:

    services:
      wait-forever:
        build:
          context: ./wait-forever
        ports:
          # Forwarding for Palworld server b/c this container owns the netns
          - 8211:8211/udp
          - 27015:27015/udp
      palworld:
        image: thijsvanloef/palworld-server-docker:latest
        restart: unless-stopped
        network_mode: service:wait-forever # <-- Connected to wait-forever
        # Note how the port forwarding got moved to wait-forever
        # ports:
        #   - 8211:8211/udp
        #   - 27015:27015/udp
        volumes:
          - ./data:/palworld/
      server-manager:
        image: golang:latest
        restart: unless-stopped
        depends_on:
          - palworld
        network_mode: service:wait-forever # <-- Connected to wait-forever
        command: "/src/server-manager"
        cap_add:
          - NET_ADMIN
        volumes:
          - ./src:/src

docker-compose.yaml with wait-forever, palworld, and server-manager

Notice how the palworld container no longer contains the ports: map that forwards ports from the host to the container. Because it has network_mode: service:wait-forever, it's not allowed to forward ports. That has to be done on the container that owns the net-ns, which is wait-forever.

With that in place, the server has been running for about a week without issues. server-manager pings the Discord even if the palworld server restarts, and I can start and stop them separately (e.g. to update server-manager).

I'd love to dig into how server-manager works, but that will probably have to wait for another time. Similarly, I'd put the code up, but it's not mature enough to share (or to collaborate with other folks on), so for now, I hope this post helps anyone who needs to make Docker Compose act a bit more like Kubernetes.